Google has unveiled the latest version of its Tensor Processing Unit (TPU) processor line, which is available only through Google Cloud.

The TPU v4 is more than twice as powerful as the 2018-era v3 processor, Google claimed at its I/O 2022 keynote this week. The chip has now launched in preview, with a wider rollout planned for later this year.

“TPUs are connected together into supercomputers, called pods,” CEO Sundar Pichai explained during the conference.

“A single v4 pod contains 4,096 v4 chips, and each pod has 10x the interconnect bandwidth per chip at scale, compared to any other networking technology,” he said. The previous generation of TPU could only scale to 1,024 chips per pod.

One pod can deliver more than one exaflops of floating point performance, Pichai said. However, the company reports performance using its own custom floating point format, ‘Brain Floating Point Format’ (bfloat16), which makes comparisons to other chips difficult.
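To illustrate why bfloat16 figures are hard to compare with those quoted in other formats: bfloat16 keeps the sign bit and all 8 exponent bits of a 32-bit float but only 7 mantissa bits, so it covers the same range at much lower precision. The snippet below is a minimal sketch in plain Python, not Google's implementation. The helper names are hypothetical, and it converts by simple truncation, whereas real hardware typically rounds.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return a 16-bit bfloat16 pattern for x by truncating its float32 encoding.

    Assumption: plain truncation for illustration; hardware usually rounds.
    """
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Keep the top 16 bits: 1 sign bit, 8 exponent bits, 7 mantissa bits.
    return bits32 >> 16


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float."""
    (value,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return value


def to_bfloat16(x: float) -> float:
    """Round-trip x through the truncated bfloat16 representation."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))


if __name__ == "__main__":
    for sample in (1.0, 3.14159265, 1e38):
        print(sample, "->", to_bfloat16(sample))
```

Values that are exactly representable, such as 1.0, survive unchanged, while a value like 3.14159265 loses everything past its first few significant digits. Very large values such as 1e38 remain finite, because the exponent width matches that of a 32-bit float.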

“We already have many of these deployed today, and we’ll soon have dozens of TPU v4 pods in our data centers, many of which will be operating at or near 90 percent carbon-free energy,” Pichai continued. “It’s tremendously exciting to see this pace of innovation.”

One such data center deployment is in Mayes County, Oklahoma, where Google says it is installing eight TPU v4 pods, for a total of 32,768 chips. That will give it around 9 exaflops of performance, per its own benchmark.
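The figures quoted for the Oklahoma deployment can be cross-checked with some quick arithmetic. The sketch below uses only the rounded numbers in this article, so the per-pod and per-chip values it derives are implied estimates rather than official specifications. The variable names are illustrative.

```python
CHIPS_PER_POD = 4096    # chips in one v4 pod, per Pichai
PODS = 8                # pods planned for Mayes County
TOTAL_EXAFLOPS = 9.0    # approximate total, per Google's own bfloat16 figure

total_chips = PODS * CHIPS_PER_POD

# Implied values, derived from the rounded total above (not official specs).
per_pod_exaflops = TOTAL_EXAFLOPS / PODS
per_chip_teraflops = TOTAL_EXAFLOPS * 1e18 / total_chips / 1e12

print(f"Total chips: {total_chips}")
print(f"Implied performance per pod: {per_pod_exaflops:.3f} exaflops")
print(f"Implied performance per chip: {per_chip_teraflops:.1f} teraflops")
```

The chip count matches the 32,768 quoted, and the implied per-pod figure of a little over one exaflops is consistent with Pichai's claim of more than one exaflops per pod.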

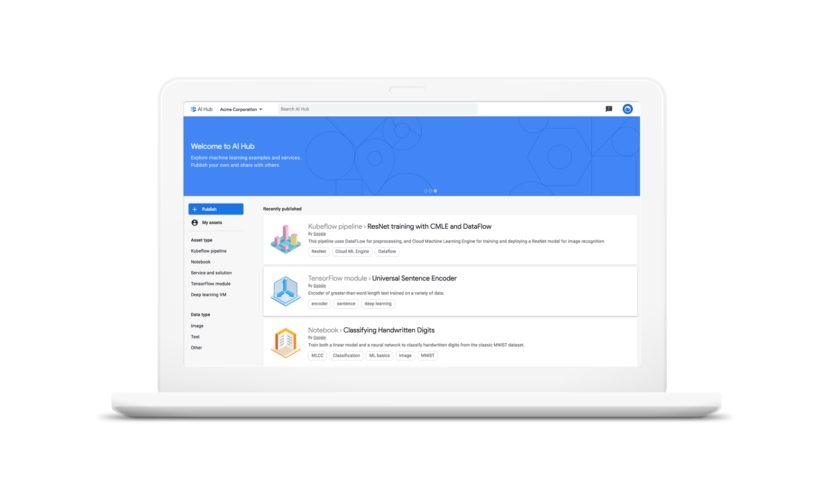

“There, we are launching the world’s largest publicly available machine learning hub for all of our Google Cloud customers,” Pichai said.

The TPU Research Cloud (TRC) program provides access to the chips at no cost to some researchers, helping establish the processor as an important tool for machine learning work.

Source: datacenterdynamics.com