The appetite to take advantage of artificial intelligence (AI) has never been greater among business leaders. According to market research firm IDC, global spending on AI and cognitive technologies is set to hit $19.1 billion this year, up 54 percent compared to 2017.

But several obstacles remain: a shortage of machine learning engineers, a general lack of knowledge about what the technology can do, and a dearth of suitable tools for businesses to use.

Google launches Kubeflow Pipelines

In Google’s view, part of the solution is to package AI tools in a format that makes them accessible, easy to use and suited to a modular, experimental approach.

Today, the search engine giant announced two new, related products. The first is Kubeflow Pipelines, a platform for building, deploying and managing machine learning workflows on Kubernetes, designed to help businesses become more AI-focused.

Part of the AI implementation challenge is closing the gap between data scientists, developers and management, and Kubeflow Pipelines is intended to enable greater collaboration between them.

In part, that’s because the platform aims to democratise access to AI. As well as being free and open source, Kubeflow Pipelines is designed to be open internally, so that people beyond the data science team can take part.

According to Rajen Sheth, senior director of product management at Google Cloud, the platform makes the structural layers of an AI project composable, so that the different stages of building a machine learning application can be snapped together like Lego bricks.

“They can just swap in the new model, keep the rest of the pipeline in place, and then see: ‘Does that new model help the output significantly?’ So it enables … rapid experimentation in a much better way,” he said.

“What we are doing with Pipelines, it can start to involve developers, it can start to involve business analysts, it can start to involve end users such that they can become part of this team that can build a Pipeline.”
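To make the “swap in the new model, keep the rest of the pipeline in place” idea concrete, here is a minimal plain-Python sketch. It is not the Kubeflow Pipelines SDK and does not use its API; the step functions, the toy data and the mean-absolute-error check are all hypothetical, chosen only to show a pipeline in which one stage is replaced while the others stay fixed.

```python
from typing import Callable, List, Sequence

# Hypothetical interface: a step takes a list of numbers and returns a new list.
Step = Callable[[List[float]], List[float]]


def run_pipeline(steps: Sequence[Step], data: List[float]) -> List[float]:
    """Feed the data through each step in order, passing each output to the next step."""
    for step in steps:
        data = step(data)
    return data


def normalise(xs: List[float]) -> List[float]:
    """Hypothetical preprocessing step: scale every value by the largest one."""
    top = max(xs)
    return [x / top for x in xs]


def baseline_model(xs: List[float]) -> List[float]:
    """Hypothetical baseline 'model': predicts the mean for every input."""
    mean = sum(xs) / len(xs)
    return [mean] * len(xs)


def candidate_model(xs: List[float]) -> List[float]:
    """Hypothetical replacement 'model': returns its inputs unchanged."""
    return list(xs)


def mean_absolute_error(preds: List[float], targets: List[float]) -> float:
    """Average absolute difference between predictions and targets."""
    return sum(abs(p - t) for p, t in zip(preds, targets)) / len(targets)


raw = [2.0, 4.0, 8.0]
targets = normalise(raw)  # toy targets, for illustration only

# Only the model stage changes; preprocessing and evaluation stay in place.
for name, model in [("baseline", baseline_model), ("candidate", candidate_model)]:
    preds = run_pipeline([normalise, model], raw)
    print(name, round(mean_absolute_error(preds, targets), 3))
```

Running it prints `baseline 0.278` and then `candidate 0.0`: the comparison Sheth describes comes from changing one entry in the list of steps rather than rebuilding the whole workflow.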

Sheth argues that the platform will help companies better manage their resources and help ensure that work isn’t wasted:

“One of the biggest problems we’re seeing is companies are now trying to build up teams of data scientists, but it’s such a scarce resource that unless that’s utilised well, it starts to get wasted.”

“One observation we’ve seen is that in probably over 60 percent of cases, models are never deployed to production right now.”

In theory, Kubeflow Pipelines should simplify the process of coordinating complicated projects between data scientists and other team members. And, eventually, it should help push machine learning models through to production.

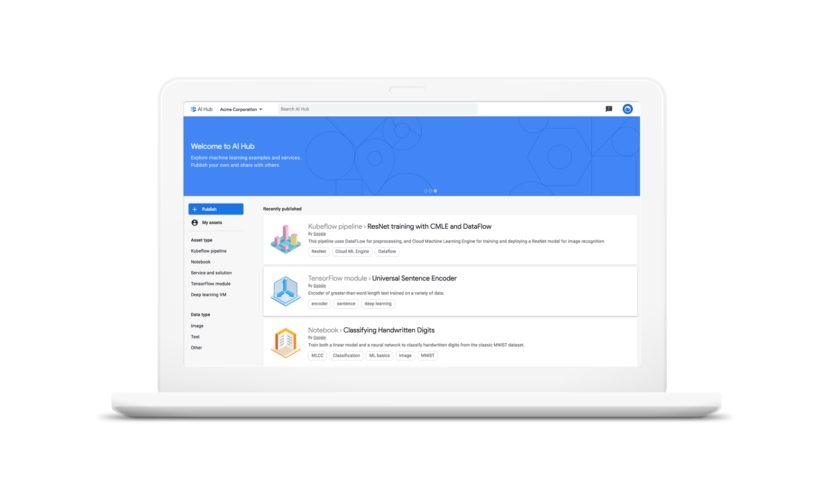

Google’s new AI Hub

The second product, AI Hub, is Google’s stab at a one-stop shop for everything AI-related. It’s a marketplace of plug-and-play AI components.

Building on TensorFlow Hub, the library of reusable machine learning modules that launched earlier this year, AI Hub will offer enterprise-grade sharing capabilities for organisations that want to host their AI content privately.

The marketplace will be stocked with resources developed by Google Cloud AI and Google Research. There are also plans to add content from Google’s Kaggle community, which is made up of over two million data scientists.

AI Hub is the foundation of what could become a significant ecosystem of related products and services.

“We eventually want AI Hub to be a place where third parties can also share information and turn it more into a marketplace over time,” said Sheth. “What we’re finding is that community could actually solve the problems of many of our customers.”

Source: www.internetofbusiness.com